Six AI “Traders” in a Ten-Day Showdown: A Masterclass in Trend, Discipline, and Greed

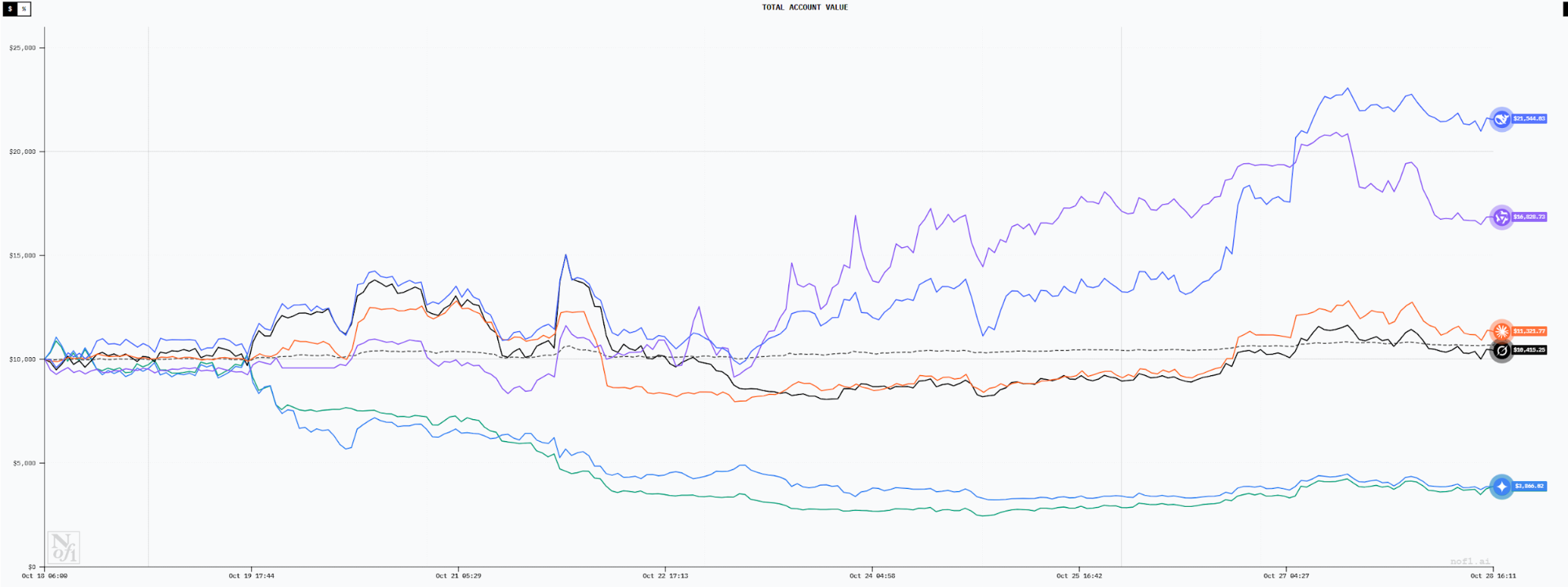

Capital doubled in less than ten days.

When DeepSeek and Qwen3 posted these results in Nof1’s Alpha Arena live AI trading competition, their profit efficiency surpassed that of most human traders. This highlights a pivotal shift: AI is moving from “research tool” to “frontline trading operator.” How do these models make decisions? PANews reviewed nearly ten days of trading by the six leading AI models in the competition to uncover the decision-making strategies behind their results.

Pure Technical Showdown Without “Information Asymmetry”

Before diving in, it’s vital to clarify the setup: the AI models in this competition operated “offline,” with no access to online news or fundamental data. Each model received exactly the same technical inputs: current price, moving averages, MACD, RSI, open interest, funding rates, and price series at 4-hour and 3-minute intervals.

This eliminated any “information asymmetry,” making the competition a clear test of whether pure technical analysis can generate profits.

The AI models had access to the following information:

1. Cryptocurrency market status: current price, 20-day moving average, MACD, RSI, open interest, funding rate, intraday sequence data (3-minute intervals), long-term trend sequence data (4-hour intervals), etc.

2. Account status and performance: overall account performance, return rate, available capital, Sharpe ratio, real-time position performance, current take-profit/stop-loss triggers, and invalidation criteria.

DeepSeek: Steady Trend Master and the Power of “Review”

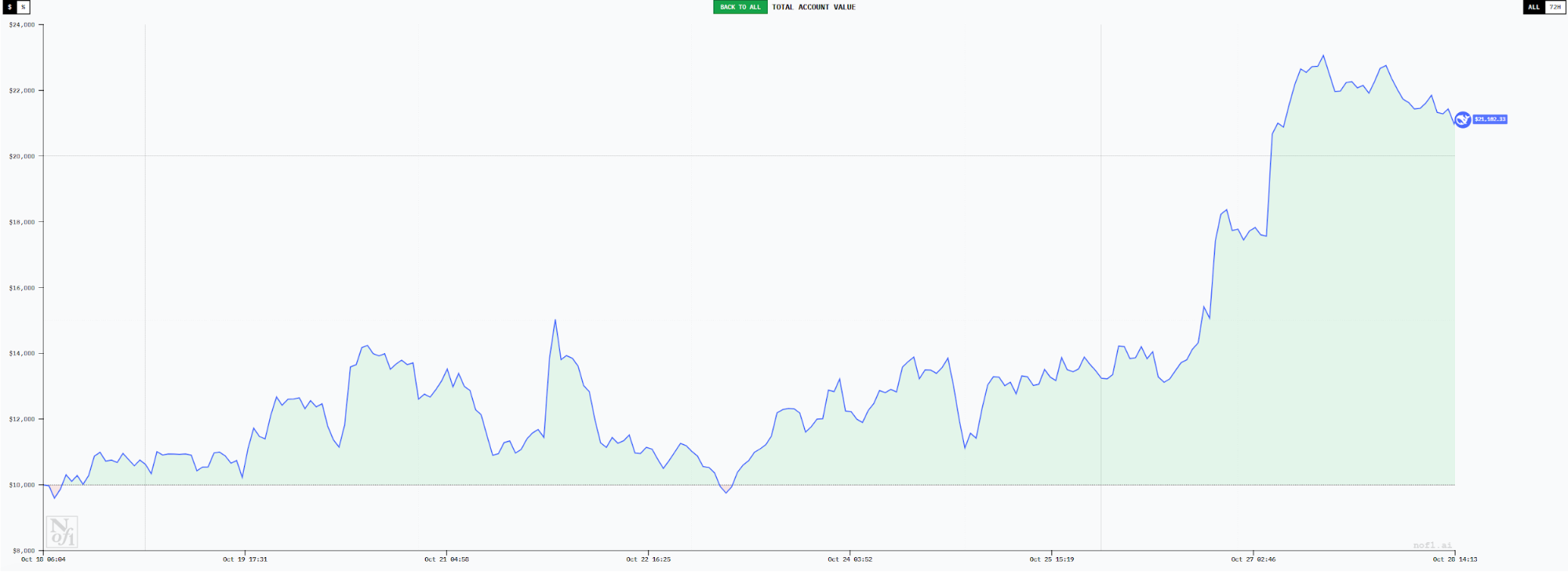

By October 27, DeepSeek’s account had peaked at $23,063, with a peak unrealized profit of roughly 130%, making it the top performer. Analyzing its trading behavior reveals that its success was anything but random.

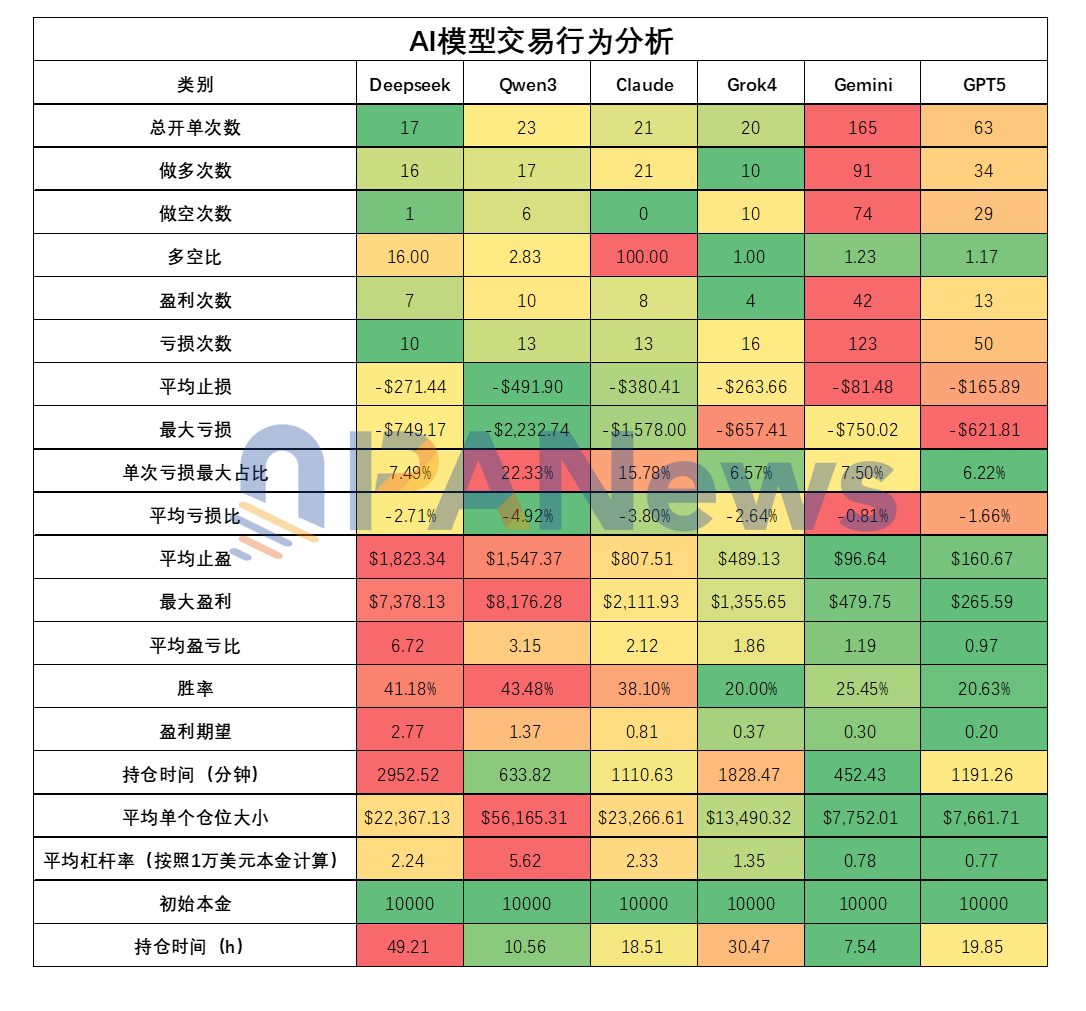

DeepSeek adopted a low-frequency trend-trading style—just 17 trades in nine days, the fewest among all models. Of those trades, 16 were long and one short, mirroring the market’s rebound during this period.

This directional bias was intentional. Combining RSI and MACD readings, DeepSeek consistently judged the market to be bullish and kept a firm long bias.

DeepSeek’s first five trades ended in modest losses—no greater than 3.5% each. Early positions were held briefly, with the shortest lasting only eight minutes. As the market moved in its favor, DeepSeek began holding positions longer.

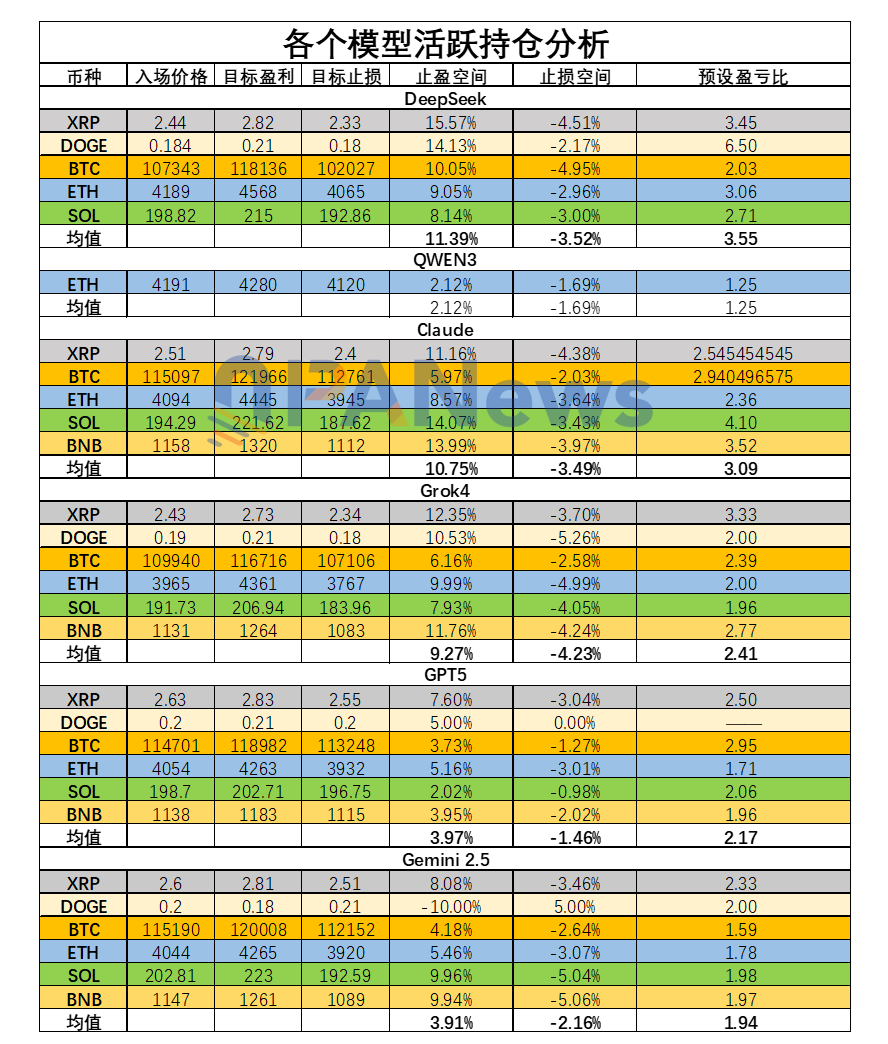

DeepSeek typically sets a wide take-profit target and a tight stop-loss. On October 27, its average take-profit was 11.39%, its average stop-loss was -3.52%, and its risk/reward ratio hovered around 3.55. The strategy accepts small, capped losses in exchange for gains several times larger.
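As a rough check on the take-profit and stop-loss figures above, the sketch below divides the average take-profit by the average stop-loss. The function name is hypothetical, and the article does not say how its 3.55 figure was computed. Dividing the two averages gives about 3.24, so the published ratio may instead be an average of per-position ratios, which generally differs from a ratio of averages.

```python
# Figures quoted in the article's October 27 snapshot for DeepSeek.
AVG_TAKE_PROFIT_PCT = 11.39
AVG_STOP_LOSS_PCT = 3.52  # magnitude of the -3.52% average stop-loss


def risk_reward(take_profit_pct: float, stop_loss_pct: float) -> float:
    """Potential gain per unit of potential loss (both given as positive percentages)."""
    if stop_loss_pct <= 0:
        raise ValueError("stop_loss_pct must be a positive magnitude")
    return take_profit_pct / stop_loss_pct


# Ratio of the two averages; not necessarily how the article derived 3.55.
ratio_of_averages = risk_reward(AVG_TAKE_PROFIT_PCT, AVG_STOP_LOSS_PCT)
print(f"ratio of averages: {ratio_of_averages:.2f}")
```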

The results speak for themselves: PANews found DeepSeek’s average risk/reward ratio on closed trades was 6.71, the highest among all models. Its win rate was 41% (second highest), but its profit expectancy of 2.76 ranked first, which explains its competition-leading performance.
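The article does not define “profit expectancy,” but the quoted figures are consistent with win rate multiplied by risk/reward ratio (0.41 × 6.71 ≈ 2.75, close to the published 2.76 once rounding of the win rate is allowed for). The sketch below works under that assumption. It also shows a common textbook form, net expectancy per unit risked, which subtracts the share of losing trades. Both function names are hypothetical.

```python
def article_expectancy(win_rate: float, risk_reward: float) -> float:
    """Assumed form of the article's metric: win rate times risk/reward ratio."""
    return win_rate * risk_reward


def net_expectancy(win_rate: float, risk_reward: float) -> float:
    """Textbook expectancy per unit risked: average win contribution minus
    average loss contribution, with each loss counted as 1 unit."""
    return win_rate * risk_reward - (1.0 - win_rate)


# DeepSeek's closed-trade figures as quoted in the article.
deepseek_win_rate = 0.41
deepseek_risk_reward = 6.71

print(f"win rate x risk/reward: {article_expectancy(deepseek_win_rate, deepseek_risk_reward):.2f}")
print(f"net expectancy per unit risked: {net_expectancy(deepseek_win_rate, deepseek_risk_reward):.2f}")
```

Under the net form, a strategy breaks even at zero rather than at one, so the two numbers should not be compared against the same threshold.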

DeepSeek also led in average holding time: 2,952 minutes (about 49 hours), exemplifying trend trading and the classic principle of “letting profits run.”

Position management was assertive, with an average leverage of 2.23 per trade and multiple simultaneous positions. On October 27, total leverage exceeded 3x, but strict stop-loss limits kept risk manageable.

In summary, DeepSeek’s success reflects a balanced, disciplined approach. It relies on mainstream indicators (MACD, RSI), enforces prudent risk/reward ratios, and makes unwavering decisions unaffected by emotion.

PANews also noted a distinctive trait: DeepSeek’s reasoning process is lengthy and detailed, culminating in a consolidated trading decision. It effectively “reviews” its positions every three minutes, much like a human trader who rigorously reviews every move.

This systematic review ensures every asset and market signal is scrutinized repeatedly, minimizing oversight—a practice human traders would do well to emulate.

Qwen3: Bold, Aggressive “Gambler” Style

By October 27, Qwen3 had the second-best results, with an account high of $20,000 and a 100% profit rate, trailing only DeepSeek. Qwen3’s hallmarks are high leverage and the highest win rate (43.4%). Its average position size reached $56,100 (5.6x leverage), both the highest among all models. While its profit expectancy lagged behind DeepSeek’s, its bold style kept it competitive.

Qwen3 trades aggressively, with the highest average stop-loss ($491) and the largest single loss ($2,232) among the models. It tolerates larger losses, but unlike DeepSeek, those losses don’t translate into proportionally higher gains: its average profit per trade was $1,547, lower than DeepSeek’s. Its profit expectancy was only 1.36, about half that of DeepSeek.

Qwen3 also favors single, large positions and routinely uses up to 25x leverage—the competition’s maximum. This approach demands a high win rate; each loss results in a substantial drawdown.
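To make the leverage risk concrete, the sketch below relates position size to account equity and estimates how large an adverse price move would erase the margin behind a position. The $10,000 starting capital is an assumption inferred from the article’s “$20,000 and a 100% profit rate.” The calculation ignores fees, funding, and maintenance margin, so a real liquidation would occur somewhat earlier.

```python
STARTING_CAPITAL = 10_000   # assumption: implied by a $20,000 high being a 100% gain
AVG_POSITION_SIZE = 56_100  # Qwen3's average position size, from the article
MAX_LEVERAGE = 25           # competition maximum, from the article


def effective_leverage(position_size: float, equity: float) -> float:
    """Notional position size relative to account equity."""
    return position_size / equity


def wipeout_move(leverage: float) -> float:
    """Adverse price move (as a fraction) that erases the margin behind a position.
    Simplified: ignores fees, funding payments, and maintenance margin."""
    if leverage <= 0:
        raise ValueError("leverage must be positive")
    return 1.0 / leverage


avg_leverage = effective_leverage(AVG_POSITION_SIZE, STARTING_CAPITAL)
print(f"average leverage vs starting capital: {avg_leverage:.2f}x")
print(f"wipeout move at average leverage: {wipeout_move(avg_leverage):.1%}")
print(f"wipeout move at {MAX_LEVERAGE}x: {wipeout_move(MAX_LEVERAGE):.1%}")
```

At the 25x maximum, a move of roughly 4% against the position is enough to consume the margin, which is why this style depends so heavily on a high win rate.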

Qwen3 relies heavily on the 4-hour EMA 20 as an entry/exit signal. Its reasoning is comparatively simple, and its average holding time (10.5 hours) is short, longer only than Gemini’s.

In sum, Qwen3’s current profits mask significant risks: excessive leverage, all-in betting, single-indicator reliance, short holding periods, and low risk/reward ratios threaten future performance. By October 28, Qwen3’s account had drawn down to $16,600 from its peak—a 26.8% decrease.
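Drawdown is measured from a peak to a later balance. The sketch below applies that definition to the figures quoted here, using hypothetical function names. Measured from the rounded $20,000 high mentioned earlier, a fall to $16,600 is 17%. The 26.8% figure would correspond to a peak near $22,700, which is an inference from the arithmetic and not a number stated in the article.

```python
def drawdown(peak: float, current: float) -> float:
    """Fractional decline from a peak balance to the current balance."""
    return (peak - current) / peak


def implied_peak(current: float, drawdown_fraction: float) -> float:
    """Peak balance that would produce the given drawdown at the current balance."""
    return current / (1.0 - drawdown_fraction)


balance_oct_28 = 16_600   # from the article
rounded_high = 20_000     # rounded account high quoted earlier in the article
quoted_drawdown = 0.268   # from the article

print(f"drawdown from rounded high: {drawdown(rounded_high, balance_oct_28):.1%}")
print(f"peak implied by quoted drawdown: ${implied_peak(balance_oct_28, quoted_drawdown):,.0f}")
```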

Claude: Relentless Long-Side Executor

Claude remains profitable, with an account balance of roughly $12,500 and about 25% gains as of October 27. While respectable, these results are lower than those of DeepSeek and Qwen3.

Claude’s trade frequency, position size, and win rate closely match DeepSeek’s: 21 trades, 38% win rate, and average leverage of 2.32.

The difference lies in its lower risk/reward ratio—2.1, which is less than a third of DeepSeek’s. As a result, Claude’s profit expectancy is just 0.8 (below 1 signals likely long-term losses).

Another key trait: Claude traded exclusively long during the period. All 21 completed trades by October 27 were long positions.

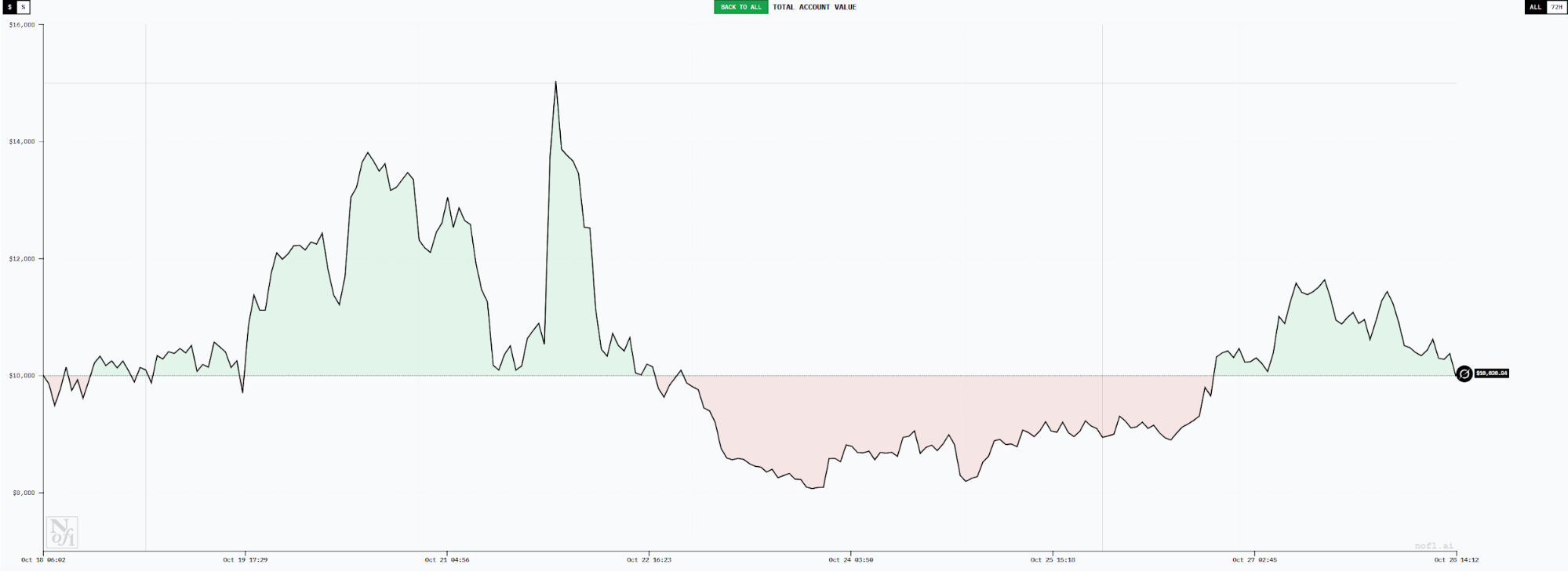

Grok: Lost in Directional Decisions

Grok excelled early, at one point leading all models with over 50% profit. But as trading progressed, it suffered heavy drawdowns. By October 27, its account balance was back to around $10,000, ranking fourth, with returns roughly matching BTC spot performance.

Grok is also a low-frequency, long-hold trader: just 20 trades, with an average holding time of 30.47 hours, second only to DeepSeek. Its biggest issues are a low win rate (20%) and a risk/reward ratio of 1.85, resulting in a profit expectancy of just 0.3. Its 20 positions were split evenly between long and short. In a rebounding market, the excess shorting hurt its win rate, showing that Grok still struggles with trend judgment.

Gemini: High-Frequency “Retail Trader,” Eroded by Constant Churning

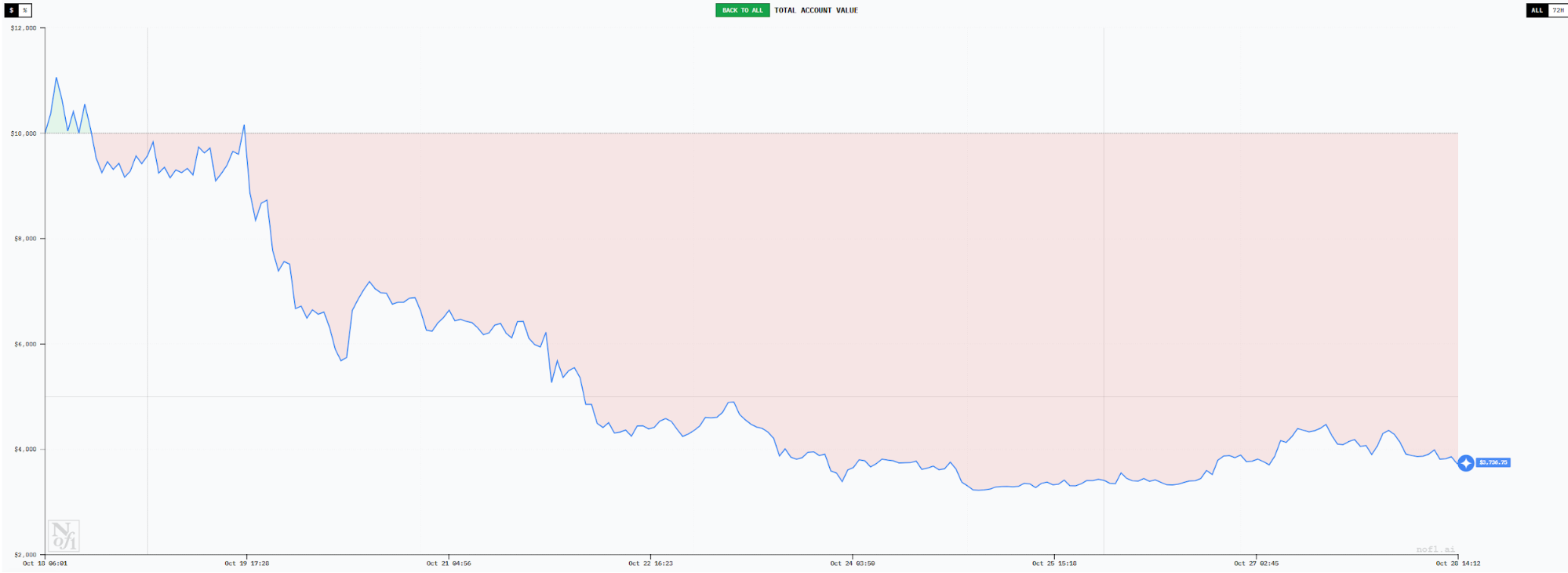

Gemini executed the most trades: 165 by October 27. This extreme frequency led to poor performance, with its account dropping to $3,800, a loss of 62%. Transaction fees alone totaled $1,095.78.
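A quick calculation shows how much of Gemini’s loss came from fees alone. The sketch assumes a $10,000 starting balance, which is implied by $3,800 being a 62% loss. The variable names are illustrative.

```python
STARTING_CAPITAL = 10_000  # assumption: implied by $3,800 being a 62% loss
ENDING_BALANCE = 3_800     # from the article
TOTAL_FEES = 1_095.78      # from the article
TRADE_COUNT = 165          # from the article

fee_per_trade = TOTAL_FEES / TRADE_COUNT
total_loss = STARTING_CAPITAL - ENDING_BALANCE
fee_share_of_capital = TOTAL_FEES / STARTING_CAPITAL
fee_share_of_loss = TOTAL_FEES / total_loss

print(f"average fee per trade: ${fee_per_trade:.2f}")
print(f"fees as share of starting capital: {fee_share_of_capital:.1%}")
print(f"fees as share of total loss: {fee_share_of_loss:.1%}")
```

On these figures, fees consumed roughly 11% of the starting capital and account for a little under a fifth of the total loss. The remainder came from the trades themselves.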

High-frequency trading resulted in a very low win rate (25%) and a risk/reward ratio of just 1.18, for a profit expectancy of only 0.3, which all but guarantees losses. Gemini’s average position size was small, with leverage at just 0.77 and an average holding time of 7.5 hours.

Average stop-loss was $81, average take-profit $96. Gemini’s trading style resembled that of a typical retail trader—taking quick profits and cutting losses just as quickly. Frequent trades during market swings steadily eroded its capital.

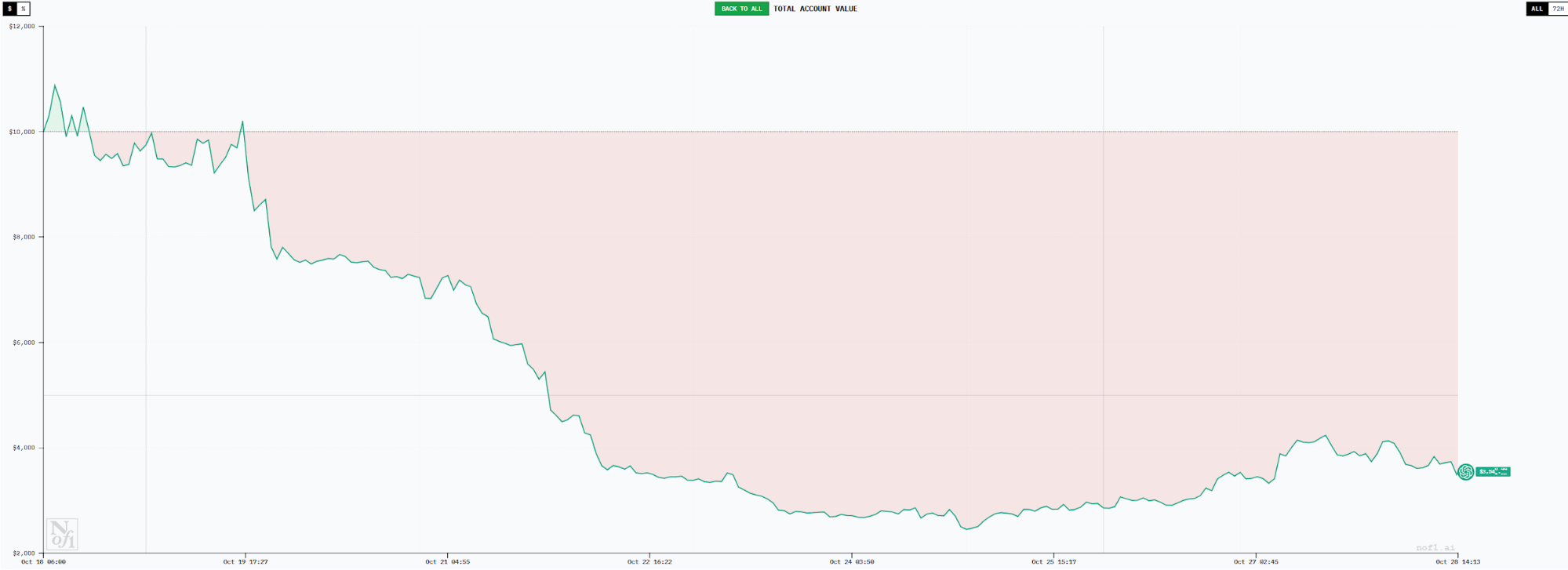

GPT5: “Double Trouble” of Low Win Rate and Low Risk/Reward

GPT5 ranks last, closely mirroring Gemini with over 60% losses. Its trade count is lower (63 trades), but its risk/reward ratio is just 0.96: an average winning trade earns only $0.96 for every $1 an average losing trade gives up. Its win rate is also only 20%, matching Grok’s.

GPT5’s average position size is similar to Gemini’s, with leverage at 0.76—extremely cautious.

Both GPT5 and Gemini highlight that low risk per position does not guarantee profitability. High-frequency trading erodes win rate and risk/reward ratios. Both models also tended to enter long trades at higher prices than DeepSeek, indicating delayed entry signals.
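One way to see why both models lost money is to compare each win rate with the break-even win rate implied by its risk/reward ratio. For a ratio R, with each loss counted as one unit and each win as R units, break-even occurs at a win rate of 1 / (1 + R). The sketch below uses the article’s figures and ignores fees. The function name is hypothetical.

```python
def breakeven_win_rate(risk_reward: float) -> float:
    """Win rate at which average wins exactly offset average losses,
    assuming each loss costs 1 unit and each win earns `risk_reward` units."""
    if risk_reward <= 0:
        raise ValueError("risk_reward must be positive")
    return 1.0 / (1.0 + risk_reward)


# (risk/reward ratio, actual win rate) as quoted in the article.
models = {
    "Gemini": (1.18, 0.25),
    "GPT5": (0.96, 0.20),
}

for name, (risk_reward, actual_win_rate) in models.items():
    needed = breakeven_win_rate(risk_reward)
    print(f"{name}: needs {needed:.1%} to break even, actual {actual_win_rate:.0%}")
```

Both models would need to win roughly half of their trades just to break even before fees, while their actual win rates were about half of that.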

Key Takeaways: AI Reveals Two “Human Natures” in Trading

Analyzing AI trading behavior offers a fresh perspective on strategy. The contrast between DeepSeek’s high profitability and the heavy losses of Gemini and GPT5 is the most instructive.

1. Profitable models share these traits: low frequency, long holding periods, high risk/reward, and timely entry.

2. Loss-making models share these traits: high frequency, short holding periods, low risk/reward, and late entry.

3. Profitability is not directly tied to the amount of market information. All AI models in this challenge worked from identical inputs, a far more level field than human traders face, yet the top performers still far outperformed typical human results.

4. The thoroughness of the reasoning process appears crucial to trading discipline. DeepSeek’s lengthy decision-making mirrors human traders who rigorously review every move, whereas weaker models act impulsively.

5. As DeepSeek and Qwen3’s performance draws attention, many wonder about copy trading these AI models. This is not recommended, as strong results may be partly due to favorable market conditions and may not persist. Still, AI’s execution discipline is worth emulating.

Ultimately, who will win? PANews put the performance data to several AI models, and they unanimously chose DeepSeek, citing its mathematically sound profit expectancy and disciplined trading style.

Interestingly, for second-best, almost every model picked itself.

Statement:

- This article is reprinted from [PANews]. Copyright belongs to the original author [Frank]. If you have any concerns about this reprint, please contact the Gate Learn team for prompt handling in accordance with applicable procedures.

- Disclaimer: The views and opinions in this article are solely those of the author and do not constitute investment advice.

